Search engines are at the heart of how users find information online. Whether you’re a website owner, content creator, marketer, or just a curious digital enthusiast, understanding how they operate helps you navigate the complexities of online visibility and optimize your content to rank higher in search results. In this article, we will look at the three key processes that drive search engines: crawling, indexing, and ranking. These processes underpin search engine optimization (SEO), especially when the goal is to get your pages discovered and ranked quickly.

What is a Search Engine?

A search engine is a software system that retrieves information from the web in response to user queries. The most popular search engines today are Google, Bing, and Yahoo, with Google leading the pack and handling roughly 90% of global searches. Search engines provide an incredibly valuable service by allowing users to find information quickly from the vast pool of content available online.

At the core of every search engine lie three primary processes: crawling, indexing, and ranking. Together, they determine how websites and content are discovered, organized, and presented to users.

Crawling: The First Step in Search Engine Operations

The crawling process refers to the method by which search engines discover content on the internet. A search engine crawler, often referred to as a bot or spider, is a program that systematically navigates the web by following hyperlinks from one page to another. This enables crawlers to find new pages and return to previously visited pages to check for updates.
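The link-following behavior described above can be sketched as a breadth-first traversal. This is a minimal illustration, not how any production crawler is built: the `crawl` function, the `LinkExtractor` class, and the three-page `PAGES` site are all hypothetical, and pages are read from an in-memory dictionary rather than fetched over HTTP.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed_url, fetch, max_pages=100):
    """Breadth-first crawl starting at seed_url.

    `fetch` is a caller-supplied function that maps a URL to its HTML,
    or returns None when the page cannot be retrieved.
    """
    visited = []
    seen = {seed_url}
    queue = deque([seed_url])
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue  # unreachable page: skip it
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited


# Hypothetical three-page site held in memory (assumption for illustration).
PAGES = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/about">About</a> <a href="/missing">Gone</a>',
}

order = crawl("https://example.com/", PAGES.get)
print(order)
```

The `seen` set is what keeps the crawler from looping forever when pages link back to each other, and the `/missing` link shows how a dead URL is discovered but never recorded as visited.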

Crawlers work tirelessly, traversing millions of pages to keep the search engine’s database up to date. However, they do not crawl every page on the internet, nor do they visit pages in arbitrary order. Instead, they prioritize which pages to crawl based on several factors, such as:

- Page popularity: Websites with many inbound links (backlinks) or higher traffic are crawled more often.

- Freshness of content: New or updated pages are prioritized for crawling to keep the search engine’s index current.

- Site structure: Well-organized websites with clear navigation and an XML sitemap are easier for crawlers to navigate.
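To make the idea of crawl prioritization concrete, here is a small sketch that combines the three factors above into a single score and orders pages with a priority queue. The formula, the weights, and the sample pages are assumptions chosen for illustration; real search engines do not publish how they schedule crawls.

```python
import heapq


def crawl_priority(backlinks, days_since_update, in_sitemap):
    """Return a score where higher means "crawl sooner".

    The weights below are illustrative assumptions, not values used by
    any real search engine.
    """
    popularity = backlinks / (backlinks + 10)   # approaches 1.0 as backlinks grow
    freshness = 1 / (1 + days_since_update)     # 1.0 for a page changed today
    structure = 1.0 if in_sitemap else 0.0
    return 0.5 * popularity + 0.3 * freshness + 0.2 * structure


def schedule(pages):
    """Yield URLs from highest to lowest priority."""
    # heapq is a min-heap, so the score is negated before pushing.
    heap = [(-crawl_priority(*signals), url) for url, signals in pages.items()]
    heapq.heapify(heap)
    while heap:
        _, url = heapq.heappop(heap)
        yield url


# Hypothetical pages: (backlinks, days since last update, listed in sitemap)
pages = {
    "/popular-guide": (40, 30, True),
    "/breaking-news": (5, 0, True),
    "/orphan-page": (0, 400, False),
}
crawl_order = list(schedule(pages))
print(crawl_order)
```

With these made-up weights, a freshly updated page with few backlinks can still outrank an older but more popular one, while a stale page with no backlinks and no sitemap entry falls to the back of the queue.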

Site owners can control the crawling process to some extent by using a robots.txt file, which tells crawlers which pages they should or should not visit. Note that robots.txt governs crawling rather than indexing, but blocking crawlers from important pages still keeps search engines from reading their content and can hurt a site’s search engine optimization (SEO). Additionally, XML sitemaps help crawlers discover and prioritize critical pages on a site.
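As a rough illustration of how a well-behaved crawler reads these instructions, the snippet below parses a robots.txt file with Python’s standard `urllib.robotparser` module. The file contents, the `ExampleBot` user agent, and the example.com URLs are hypothetical; `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents (assumption for illustration).
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Ask whether a given crawler may fetch a given URL.
can_crawl_blog = parser.can_fetch("ExampleBot", "https://example.com/blog/post-1")
can_crawl_admin = parser.can_fetch("ExampleBot", "https://example.com/admin/settings")

# Sitemap locations declared in the file.
sitemaps = parser.site_maps()

print(can_crawl_blog, can_crawl_admin, sitemaps)
```

Here the blog URL is allowed and the admin URL is disallowed, which is why an overly broad `Disallow` rule can accidentally hide pages you want found.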

One challenge with crawling is dynamic content, such as JavaScript-heavy pages or content behind login forms. These types of pages can be difficult for crawlers to access. As a result, it’s important for website owners to ensure that search engines can effectively crawl all the key content on their site.

Indexing: Organizing the Web’s Content

Once crawlers have discovered web pages, the next step is indexing. In simple terms, indexing is the process by which a search engine organizes the data it has crawled. This process involves storing and categorizing content based on various parameters such as keywords, relevance, and content type.
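A classic data structure for organizing crawled text by keyword is the inverted index, which maps each term to the pages that contain it. The sketch below is a deliberately simplified version under that assumption; the function names and sample documents are hypothetical, and real indexes also store positions, frequencies, and many other signals.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(documents):
    """Map each term to the set of document IDs that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index


def search(index, query):
    """Return IDs of documents containing every term in the query."""
    terms = tokenize(query)
    if not terms:
        return set()
    results = set(index.get(terms[0], set()))
    for term in terms[1:]:
        results &= index.get(term, set())
    return results


# Hypothetical crawled pages (assumption for illustration).
documents = {
    "page-1": "How search engines crawl the web",
    "page-2": "A guide to baking sourdough bread",
    "page-3": "Search engines rank pages by relevance",
}
index = build_index(documents)
matches = search(index, "search engines")
print(sorted(matches))
```

Because the lookup goes from term to pages rather than scanning every page for the term, a query only touches the entries for the words it contains.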

After crawling a page, the search engine looks at different aspects of the page to understand what it’s about. Some key elements considered during indexing include:

- Text content: The words on the page, including headings, body text, and metadata, are analyzed to understand the context.

- Images and videos: Search engines index media files using surrounding text, file names, and alt text, sometimes supplemented by image recognition, and link them to relevant keywords.

- Meta tags and related markup: Title tags and meta descriptions, along with alt attributes on images, provide further context about the content.

- URL structure: Clean, descriptive URLs help search engines understand the page’s content and relevance to search queries.
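The elements listed above can be pulled out of a page with an ordinary HTML parser. The following sketch uses Python’s standard `html.parser` module; the `PageSignals` class and the sample HTML are hypothetical, and it only hints at the kind of extraction an indexer might perform.

```python
from html.parser import HTMLParser


class PageSignals(HTMLParser):
    """Collects the title, meta description, headings, and image alt text."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self.headings = []
        self.alt_texts = []
        self._current = None  # tag whose text is currently being captured

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("title", "h1", "h2"):
            self._current = tag
            if tag != "title":
                self.headings.append("")
        elif tag == "meta" and attrs.get("name") == "description":
            self.description = attrs.get("content") or ""
        elif tag == "img" and attrs.get("alt"):
            self.alt_texts.append(attrs["alt"])

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current == "title":
            self.title += data
        elif self._current in ("h1", "h2"):
            self.headings[-1] += data


# Hypothetical page (assumption for illustration).
HTML = """
<html><head>
<title>How Search Engines Work</title>
<meta name="description" content="Crawling, indexing, and ranking explained.">
</head><body>
<h1>Crawling</h1>
<img src="spider.png" alt="Diagram of a web crawler">
</body></html>
"""

signals = PageSignals()
signals.feed(HTML)
print(signals.title, signals.description, signals.headings, signals.alt_texts)
```

An image without an `alt` attribute contributes nothing here, which is one reason descriptive alt text matters for media content.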

At this stage, a search engine uses algorithms to assess how useful or relevant the content is. High-quality, original content tends to be indexed more favorably than duplicate or low-quality content. Some content may even be excluded from indexing if it violates search engine guidelines. For example, pages that contain spammy, low-value content, such as thin content or keyword stuffing, may be penalized or ignored.

Fast Search Engine Optimization (SEO) strategies focus on ensuring that pages are indexed quickly and accurately. This can be achieved by optimizing content with targeted keywords, ensuring fast page load times, and improving website architecture for easy crawling and indexing.

Ranking: How Search Engines Determine What to Show First

After crawling and indexing, the next step is ranking. Ranking is the process by which search engines determine the order in which pages appear in search results. Search engine algorithms rank pages based on their relevance and authority, using a variety of factors to determine which pages best answer a user’s query.

The ranking factors can be grouped into several broad categories:

- Relevance to the search query: The content must closely match the user’s search intent. This involves keyword usage, but also contextual relevance, such as semantic understanding and natural language processing.

- Backlinks: One of the most important ranking factors, backlinks are links from other websites that point to your content. The number and quality of backlinks are indicators of your site’s authority and trustworthiness.

- User experience (UX): Search engines want to provide the best experience for users. This includes mobile-friendliness, fast loading times, easy navigation, and a secure connection (HTTPS).

- On-page SEO: Properly optimizing your on-page elements—such as titles, headings, and images—improves your page’s chances of ranking higher.

- Content quality: Comprehensive, engaging, and well-structured content that answers user queries tends to rank better than thin or poorly written content.
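To show how backlinks can translate into authority, here is a simplified version of the PageRank idea, computed by power iteration over a tiny link graph. This is a teaching sketch only: modern search engines combine many signals, and the `pagerank` function, the damping value, and the four-page graph are assumptions made for illustration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Approximate PageRank scores by power iteration.

    `links` maps each page to the list of pages it links to.
    """
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    n = len(pages)
    ranks = {page: 1 / n for page in pages}

    for _ in range(iterations):
        # Every page starts each round with a small baseline share.
        new_ranks = {page: (1 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its rank evenly among the pages it links to.
                share = damping * ranks[page] / len(targets)
                for target in targets:
                    new_ranks[target] += share
            else:
                # A page with no outgoing links spreads its rank over all pages.
                share = damping * ranks[page] / n
                for other in pages:
                    new_ranks[other] += share
        ranks = new_ranks
    return ranks


# Hypothetical site: "orphan" links out but receives no backlinks.
links = {
    "home": ["about", "blog"],
    "about": ["home"],
    "blog": ["home", "about"],
    "orphan": ["home"],
}
ranks = pagerank(links)
ranking = sorted(ranks, key=ranks.get, reverse=True)
print(ranking)
```

In this toy graph the home page, which every other page links to, comes out on top, while the orphan page with no backlinks ends up last.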

In recent years, search engines have increasingly incorporated machine learning and artificial intelligence to refine ranking processes. Algorithms like Google’s RankBrain and BERT analyze queries more effectively, taking into account the meaning and intent behind the search, rather than relying solely on keyword matches.

Understanding the Search Engine Result Pages (SERPs)

Search engine result pages (SERPs) are the pages displayed by search engines after a user submits a query. These pages contain both organic (non-paid) results and paid advertisements. The structure of the SERP can vary depending on the type of query and the search engine’s features.

Key elements of the SERP include:

- Organic results: These are the unpaid, algorithmically ranked results that appear based on relevance and authority.

- Featured snippets: These are short, concise answers that appear above the regular search results, providing immediate value to the user.

- Local results: For location-based searches, search engines display businesses and services relevant to the user’s geographic area.

- Rich snippets: These include additional information such as star ratings, product prices, and reviews, which enhance the visibility of the page.

The position your website occupies on the SERP depends on how well it performs against the ranking factors described above. Websites that rank higher in the results typically receive more clicks, which is why SEO is crucial to improving your visibility and driving organic traffic.

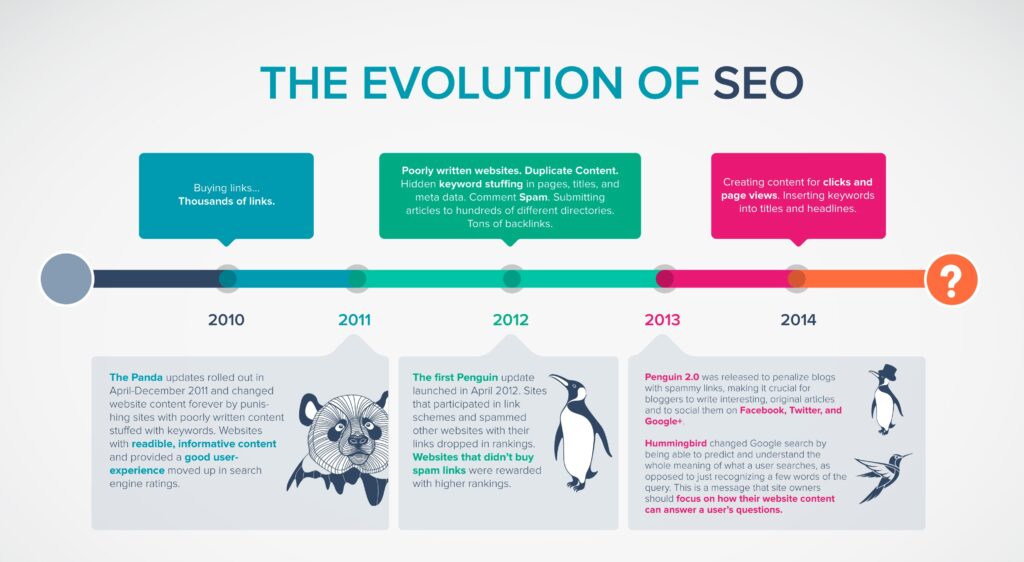

The Evolution of Search Engine Algorithms

Search engine algorithms have undergone significant changes over the years. Early algorithms were largely based on simple keyword matching, but with the rise of spammy SEO practices, search engines became more sophisticated.

Google’s major algorithm updates include:

- Panda: Aimed at reducing the ranking of low-quality, thin content.

- Penguin: Targeted websites with spammy backlink profiles.

- Hummingbird: Focused on improving semantic search and understanding user intent.

- RankBrain: A machine learning-based algorithm that helps Google understand more complex queries.

- BERT: Focuses on natural language processing to understand the context of a search query.

Each update has refined how search engines interpret content, prioritize relevance, and rank pages. The evolution of these algorithms emphasizes the need for high-quality, user-focused content and technical SEO practices.

Best Practices for Optimizing Websites for Crawling, Indexing, and Ranking

To help your website get crawled, indexed, and ranked quickly, consider the following best practices:

- Keyword research: Identify relevant keywords for your target audience and optimize your content around them.

- On-page SEO: Optimize titles, headings, URLs, and metadata. Ensure that content is well-structured and answers user queries.

- Technical SEO: Improve site speed, make sure your website is mobile-friendly, and create an XML sitemap to guide crawlers.

- Content quality: Publish high-quality, engaging, and original content that provides value to your audience.

- Backlinks: Build high-quality backlinks from reputable sites in your industry to improve your site’s authority.

- Monitor performance: Use tools like Google Search Console and Google Analytics to track your site’s performance and make data-driven improvements.
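As one example of the technical SEO step above, an XML sitemap can be generated with a few lines of code. The sketch below follows the sitemaps.org `urlset` format and uses Python’s standard `xml.etree.ElementTree`; the `build_sitemap` helper, the URLs, and the dates are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (url, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # YYYY-MM-DD
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages and dates (assumption for illustration).
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/how-search-works", "2024-02-01"),
])
print(sitemap_xml)
```

The resulting file is typically saved as sitemap.xml at the site root and can be referenced from robots.txt or submitted through a tool such as Google Search Console.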

Conclusion

Search engines play a critical role in helping users find relevant content quickly. Understanding how search engines crawl, index, and rank pages is essential for anyone looking to improve their website’s visibility. By mastering these processes and applying the best practices above, website owners can improve their chances of ranking higher in search results, driving more traffic and boosting online success.